Apple introduces AI model that edits photos with text instructions

Apple's research division, in collaboration with researchers from the University of California, Santa Barbara, has introduced the multimodal artificial intelligence model MGIE for image editing. Users can make changes to an image by simply describing what they want in natural language.

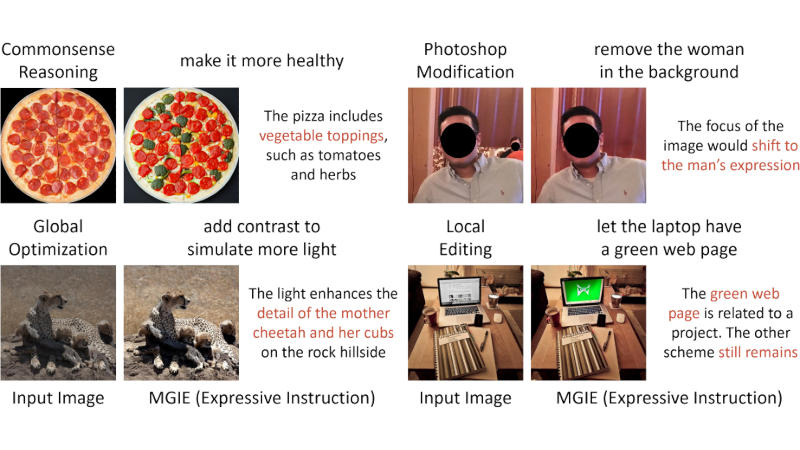

MGIE (Multimodal Large Language Model-Guided Image Editing) can be used for various image editing tasks, such as adding or removing objects. Given a command, the model interprets the user's words and then "imagines" what the edited image should look like to match those instructions.

The paper describing MGIE gives several examples of what it can do. For instance, when asked to "make it healthier" while editing a photo of a pizza, the model added vegetable toppings. In another case, given an overly dark picture of a cheetah in the desert and asked to "add contrast, simulating more light," it produced a brighter image.

MGIE is available as a free download on GitHub, and users can try it out on the Hugging Face Spaces platform. Apple has not said what plans it has for the model beyond the research project.

Some AI generators, such as OpenAI's DALL-E 3, already support image editing, and Photoshop offers generative AI features through the Adobe Firefly model. Unlike Microsoft, Meta, or Google, however, Apple has not positioned itself as a major player in artificial intelligence. Even so, Apple CEO Tim Cook has stated that new AI features will be added to the company's devices this year. In December of last year, the company released MLX, an open platform for training AI models on Apple Silicon chips.

