OpenAI introduces GPT-4: It can comment on pictures and solve problems better than ChatGPT

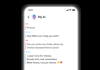

OpenAI has introduced GPT-4, a new artificial intelligence (AI) model that can respond to both images and text. For example, you can show the model a meme and ask what it means, and it will explain it.

You can also ask the model what is unusual about a given photo, and it will give a clear, understandable explanation.

GPT-4 can also describe relatively complex images; for example, it can recognize a Lightning cable adapter plugged into an iPhone in a photo.

How is GPT-4 different from GPT-3.5?

The GPT-3.5 model only worked with text, while GPT-4 can "understand" both text and images. According to the developers, it even performs at a "human level" on various professional and academic tests. GPT-4 scored at or above the 88th percentile of test takers on the Uniform Bar Exam, the LSAT, SAT Math, and SAT Evidence-Based Reading & Writing. Few human test takers achieve such results.

OpenAI spent about six months refining GPT-4, drawing on lessons from its adversarial testing program and from its other product, ChatGPT. According to the company, the result is a model that is 82% less likely than GPT-3.5 to respond to requests for prohibited content and 40% more likely to give factually correct answers.

According to the developers, the difference between GPT-3.5 and GPT-4 can be almost imperceptible in casual conversation. It becomes apparent on more complex tasks: there, GPT-4 is more reliable and creative and can handle much more complex and precise instructions than GPT-3.5.

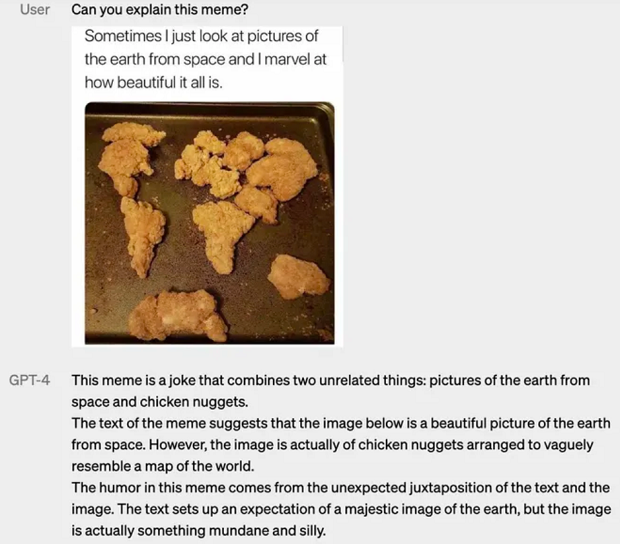

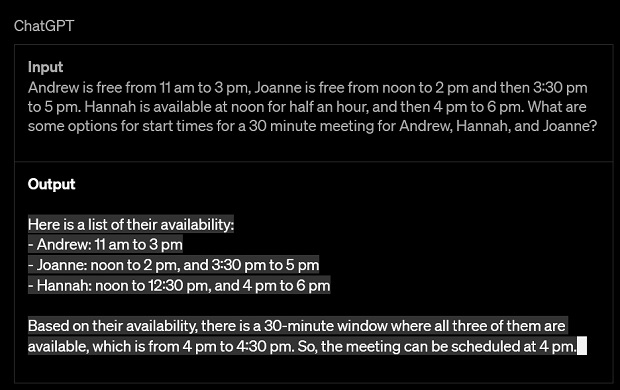

Given the same task, GPT-4 tends to produce a more accurate and sound answer than ChatGPT, which is based on GPT-3.5.

Here's how the two AI models answer the question of when Andrew, Joanna, and Hannah can meet for half an hour, given that Andrew is free from 11am to 3pm, Joanna is free from noon to 2pm and then from 3:30 to 5pm, and Hannah is available for half an hour in the afternoon and then from 4 to 6pm.
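The puzzle comes down to intersecting everyone's free intervals and keeping any overlap that lasts at least 30 minutes. Below is a minimal Python sketch of that check, written independently of either model. The helper names (`t`, `intersect`, `common_slots`) are illustrative, and because the text gives no exact time for Hannah's first half-hour, the code assumes 12:00 to 12:30 purely for illustration.

```python
def t(h, m=0):
    """Convert a clock time to minutes since midnight."""
    return h * 60 + m


def intersect(a, b):
    """Intersect two sorted lists of non-overlapping (start, end) intervals."""
    out = []
    i = j = 0
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            out.append((start, end))
        # Advance whichever interval finishes first; the other may still overlap.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return out


def common_slots(calendars, duration):
    """Return shared free intervals lasting at least `duration` minutes."""
    common = calendars[0]
    for cal in calendars[1:]:
        common = intersect(common, cal)
    return [(s, e) for s, e in common if e - s >= duration]


andrew = [(t(11), t(15))]
joanna = [(t(12), t(14)), (t(15, 30), t(17))]
# Assumption: Hannah's half-hour slot is taken to be 12:00-12:30.
hannah = [(t(12), t(12, 30)), (t(16), t(18))]

slots = common_slots([andrew, joanna, hannah], 30)
for s, e in slots:
    print(f"{s // 60:02d}:{s % 60:02d}-{e // 60:02d}:{e % 60:02d}")
```

Under that assumption the only common slot is 12:00 to 12:30. Hannah's 4 to 6pm window overlaps Joanna's afternoon slot, but by then Andrew is no longer free.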

Virtual volunteer

OpenAI is currently testing GPT-4 with Be My Eyes, an app that assists blind and low-vision people. A new Virtual Volunteer feature based on GPT-4 can answer questions about images sent to it.

For example, a user can show the Virtual Volunteer a photo of the inside of their refrigerator, and it can not only identify the products there but also work out what can be made from them. It will then suggest several recipes with step-by-step instructions.

It's still not perfect

According to the developers, GPT-4 is still far from perfect and prone to errors. For example, the model once referred to Elvis Presley as "the son of an actor," which is clearly incorrect.

It can sometimes make simple reasoning errors. It can also be overly gullible, accepting obviously false statements from the user as true.

Additionally, like ChatGPT, GPT-4 generally knows nothing about events after September 2021, when most of its training data ends. Still, the new model has clear advantages over GPT-3.5.

According to OpenAI CEO Sam Altman, GPT-4 will not be the largest language model. He also believes that humanity is still far from developing a perfect AI.

How to get access to GPT-4?

GPT-4 is available through the OpenAI API (via a waitlist) and to subscribers of ChatGPT Plus, ChatGPT's premium plan.
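For developers who have been granted API access, here is a minimal sketch of a text-only request to GPT-4 using only Python's standard library. It assumes OpenAI's Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`), the model name `gpt-4`, and an API key stored in the `OPENAI_API_KEY` environment variable; the helper names `build_request` and `ask` are illustrative. Model names and parameters change over time, so OpenAI's API reference is the authority here.

```python
import json
import os
import urllib.request

# Assumed endpoint: OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-4"):
    """Build (but do not send) a Chat Completions request for one user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the text of the model's first reply."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Only contact the API if a key is configured; otherwise just explain what is needed.
key = os.environ.get("OPENAI_API_KEY")
if key:
    print(ask("In one sentence, what is GPT-4?", key))
else:
    print("Set OPENAI_API_KEY (for an account with GPT-4 access) to send a request.")
```

Building the request separately from sending it keeps the network call in one place and makes the payload easy to inspect before anything is sent.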
