Google introduces Gemini, a major competitor to GPT-4 that understands images, video and audio in addition to text

Google has launched a new artificial intelligence (AI) model called Gemini, aiming to strengthen the company's AI capabilities and challenge competitors, including OpenAI's ChatGPT. Google CEO Sundar Pichai stated that the introduction of the new model marks the beginning of a new era of artificial intelligence at the company.

"One remarkable aspect of this is that you can work on a foundational technology and improve it, and it instantly permeates across all our products," said Pichai, adding that this AI model will eventually be integrated into Google's search system, advertising products, Chrome browser, and other services.

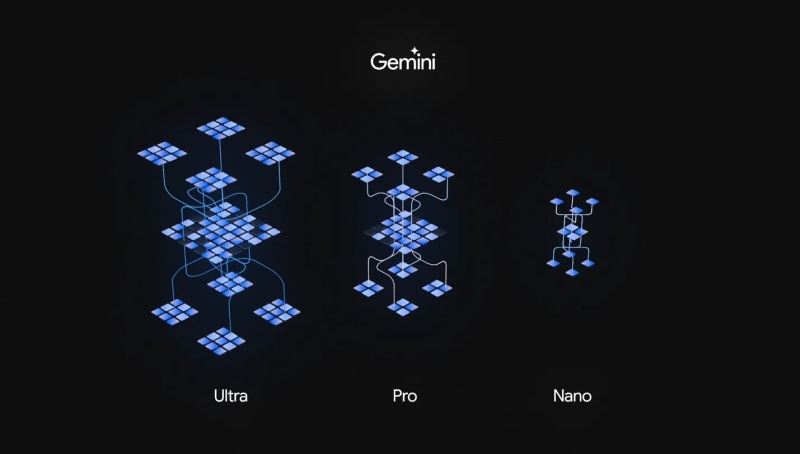

Gemini is not a single language model but a family of three. Gemini Nano is a lighter version designed to run locally on Android devices. Gemini Pro is a more powerful version that will serve as the foundation for many Google services, including the Bard chatbot. Gemini Ultra, the company's most powerful language model, is primarily intended for use in data centers and for integration with enterprise applications.

The company is bringing its new AI model to consumers in several ways. The Bard chatbot already runs on Gemini Pro, and Pixel 8 Pro users will gain access to several new features through integration with Gemini Nano. Gemini Ultra will become available next year. Starting December 13, developers and enterprise customers will be able to access Gemini Pro through Google AI Studio or Vertex AI on Google Cloud. For now, Gemini can process requests only in English, but support for other languages is expected in the future.

During the Gemini presentation, Google DeepMind CEO Demis Hassabis stated that Google had carried out a detailed comparison of its language model with GPT-4, the model that powers ChatGPT. "We conducted a very thorough comparative analysis of the systems. I think we've made significant advancements across 30 out of 32 metrics," said Hassabis. He also noted that on some benchmarks Gemini's advantage over GPT-4 is minimal, while on others it is more noticeable.

Gemini's most apparent advantage in these tests was its ability to understand and interact with visual and audio content. This reflects Google's original goal: rather than building separate models for images and sound, as OpenAI did with DALL-E and Whisper, Google set out from the beginning to create a single model capable of recognizing images and sounds. Currently, the basic versions of Gemini support text input and output, while more powerful versions, such as Gemini Ultra, can work with images, video, and audio. These models still hallucinate and are not free of bias and other issues, but Google plans to improve them over time.

Whatever the developers' own benchmarks show, the real test for Gemini will come from ordinary users who rely on it for information retrieval, content creation, coding, and many other tasks. For code generation, Gemini is paired with the new AlphaCode 2 system, which Google says performs better than 85% of participants in coding competitions, up from 50% for the original AlphaCode.

Equally important for Google is that Gemini may be its most efficient model yet. It was trained on Google's Tensor Processing Units (TPUs), which allow it to run faster and more efficiently than the company's earlier models, such as PaLM. Alongside the new language model, Google introduced TPU v5p accelerators, designed for data centers that train and run large language models.
