Why you can’t blindly trust AI: Because of Google Gemini, a person almost got botulism

The advice that neural networks give us is not always useful, and not always safe either. A case recently reported by a Reddit user confirms this once again: he wanted advice on improving his salad dressing but instead received instructions that effectively amounted to cultivating Clostridium botulinum, the bacterium that causes botulism, a rare but serious and potentially deadly illness.

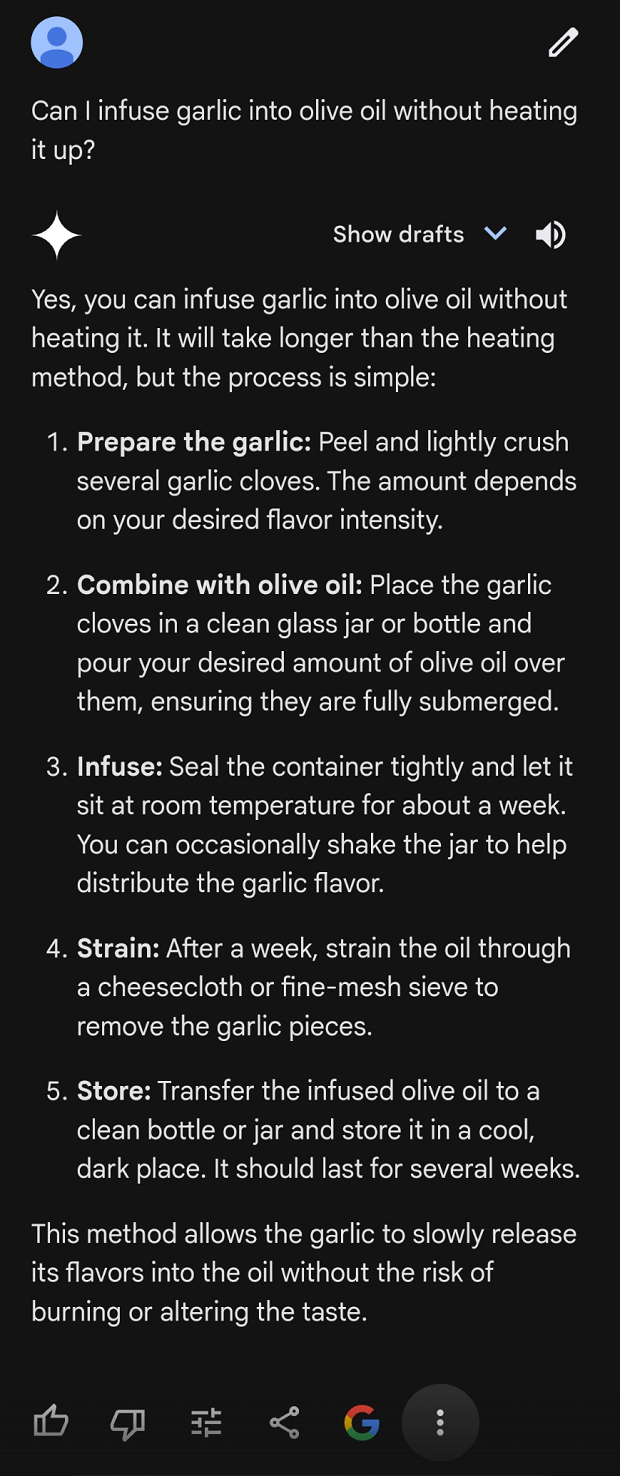

Reddit user Puzzleheaded_Spot401 said that he asked Google Gemini how to improve an olive oil salad dressing by adding garlic to it without heating it. The neural network offered very plausible-looking instructions, and the user followed them.

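After three or four days, he noticed tiny bubbles rising from the bottom of the container. At first he thought this was part of the process. On day 7, however, he Googled it and discovered that he had, in effect, been growing botulism bacteria in a jar. As it turns out, raw garlic stored in olive oil at room temperature is a well-known botulism risk: garlic can carry Clostridium botulinum spores from the soil, and the oil shuts out oxygen, giving the spores the low-acid, oxygen-free conditions they need to grow and produce toxin.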

Had he eaten the oil prepared according to the AI's recipe, he could have contracted botulism, which can be fatal if not treated promptly.

Why did the neural network issue such a dangerous recommendation?

The neural network may simply have gotten confused: garlic is widely used in cooking, and many other ingredients can be infused into oil with perfectly safe results.

Some users joked that the incident might be an early sign of the machines rising up against humanity.

Either way, the incident underscores the need to verify any information received from AI and to apply critical thinking when using neural networks and other advanced technologies.
