AI teaches robot to balance on ball, and does it better than human trainers

A group of scientists from the University of Pennsylvania has developed DrEureka, a system that trains robots with the help of large language models such as OpenAI's GPT-4. The method turns out to be more efficient than training through a sequence of real-world tasks, but it requires special attention from humans because of the peculiarities of AI "thinking."

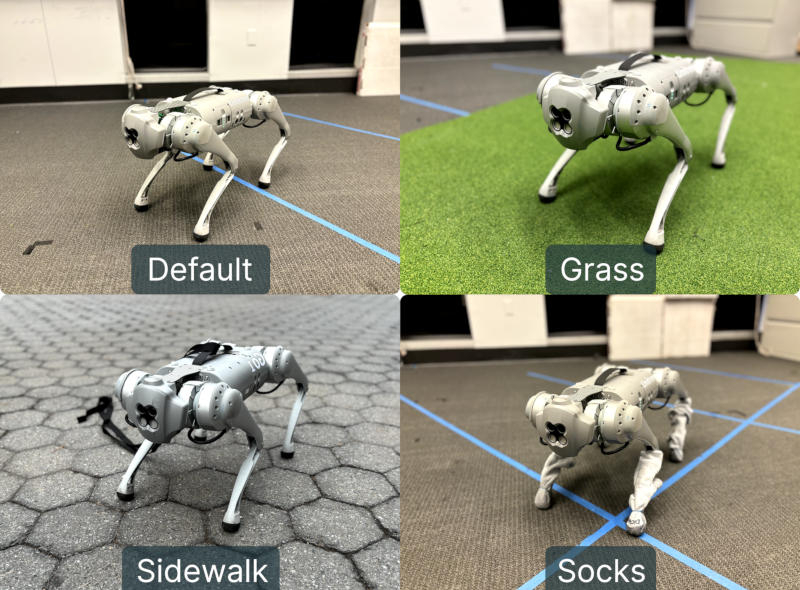

The DrEureka platform (Domain Randomization Eureka) has proven its effectiveness on the Unitree Go1 robot, a quadrupedal open-source machine. The robot is trained in a simulated environment in which key physical variables are randomized: friction coefficients, mass, damping, center-of-gravity offset, and other parameters. Based on a few user prompts, the AI generates code that describes the system of rewards and penalties used to train the robot in the virtual environment. After each simulation, the AI analyzes how well the virtual robot performed the task and how its performance can be improved. Notably, the neural network can quickly generate a large number of scenarios and run them simultaneously.
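The randomization step described above can be illustrated with a minimal Python sketch. It is not the DrEureka code: the parameter names, the numeric ranges, and the helpers `PhysicsParams`, `sample_params`, and `sample_batch` are all hypothetical and chosen only to show how each simulated episode could receive its own physics settings.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsParams:
    """One randomized set of simulator settings (hypothetical fields)."""
    friction: float       # ground friction coefficient
    added_mass_kg: float  # extra payload mass on the torso
    damping: float        # joint damping
    com_offset_m: float   # center-of-gravity offset along the body axis


# Assumed (low, high) ranges; real values would come from the training setup.
RANGES = {
    "friction": (0.3, 1.2),
    "added_mass_kg": (-1.0, 3.0),
    "damping": (0.01, 0.2),
    "com_offset_m": (-0.05, 0.05),
}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw every parameter uniformly from its range."""
    values = {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}
    return PhysicsParams(**values)


def sample_batch(n: int, seed: int = 0) -> list[PhysicsParams]:
    """Create n independent environments' worth of parameters."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in sample_batch(3):
        print(params)
```

A policy trained across many such samples has to cope with the whole range of conditions rather than one fixed simulator setting, which is the idea behind domain randomization.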

The AI sets the minimum and maximum values of each parameter at the points where the task fails or the mechanism breaks down; reaching or exceeding these limits lowers the score for the training scenario. The research authors note that the AI needs additional safety instructions to write the code correctly. Otherwise, the neural network starts to "cheat" in pursuit of maximum performance, which in the real world can lead to overheated motors or damage to the robot's limbs. In one of these unnatural scenarios, the virtual robot "discovered" that it could move faster by disabling one leg and moving on three.

Researchers instructed the AI to exercise special caution, given that the trained robot would be tested in the real world. In response, the neural network added safety terms covering smoothness of movement, horizontal orientation, torso height, and the torque of the electric motors, which must not exceed specified values. As a result, the DrEureka system trained the robot better than humans did: the machine showed a 34% increase in movement speed and a 20% increase in the distance traversed over rough terrain. Researchers explained the difference by the approach taken: when humans train a robot, they break the task down into stages and find a solution for each of them, whereas GPT trains everything at once, a feat humans are clearly unable to replicate.
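A reward function with safety terms of the kind listed above might look like the following sketch. It is an illustration under stated assumptions, not the code GPT-4 produced: the `StepState` fields, the constants `TORQUE_LIMIT` and `TARGET_HEIGHT`, and all weights are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

TORQUE_LIMIT = 20.0   # assumed per-motor torque limit, N*m
TARGET_HEIGHT = 0.30  # assumed nominal torso height, m


@dataclass
class StepState:
    """Quantities observed at one simulation step (hypothetical fields)."""
    forward_velocity: float          # m/s, the task objective
    torso_height: float              # m
    roll: float                      # rad, tilt away from horizontal
    pitch: float                     # rad
    joint_torques: Sequence[float]   # N*m, one per motor
    action: Sequence[float]          # current motor commands
    prev_action: Sequence[float]     # commands from the previous step


def reward(s: StepState, w_smooth: float = 0.1, w_orient: float = 1.0,
           w_height: float = 2.0, w_torque: float = 0.05) -> float:
    """Task reward minus weighted safety penalties."""
    task = s.forward_velocity
    # Penalize abrupt changes between consecutive actions.
    smooth = sum((a - b) ** 2 for a, b in zip(s.action, s.prev_action))
    # Penalize tilting away from a horizontal torso.
    orient = s.roll ** 2 + s.pitch ** 2
    # Penalize deviation from the nominal torso height.
    height = (s.torso_height - TARGET_HEIGHT) ** 2
    # Penalize only the part of each torque that exceeds the limit.
    torque = sum(max(0.0, abs(t) - TORQUE_LIMIT) ** 2 for t in s.joint_torques)
    return (task - w_smooth * smooth - w_orient * orient
            - w_height * height - w_torque * torque)
```

With terms like these, a policy that gains speed by slamming its motors or dropping its torso scores worse than one that moves a little slower but stays within limits, which is the behavior the safety instructions are meant to encourage.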

The DrEureka system has made it possible to go from simulation directly to real-world operation. The project's authors say they could make the platform even more efficient by giving the AI feedback from the real world. For this, the neural networks would need to analyze video recordings of the tests rather than only the errors in the robot's system logs. It takes an average person up to 1.5 years to learn to walk, and only a few can move on a yoga ball. The robot trained by DrEureka effectively handles even this task.
